Weihao Cui, Mengze Wei, Quan Chen, Xiaoxin Tang, Jingwen Leng, Li Li, Minyi Guo

In Proceedings of IEEE International Conference on Computer Design (ICCD), Oct. 2019

ABSTRACT

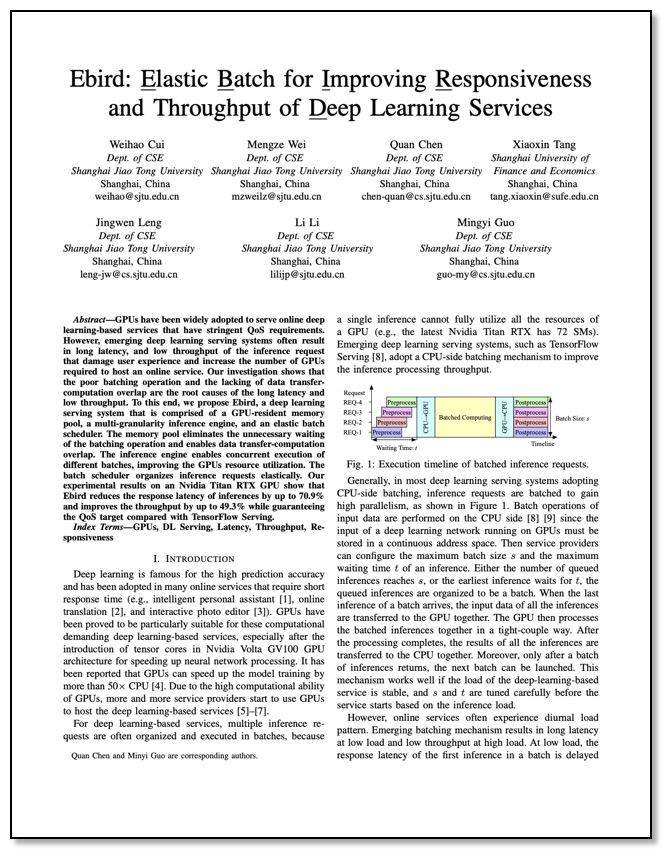

GPUs have been widely adopted to serve online deep learning-based services that have stringent QoS requirements. However, emerging deep learning serving systems often suffer from long latency and low throughput for inference requests, which damages user experience and increases the number of GPUs required to host an online service. Our investigation shows that an inefficient batching operation and the lack of data transfer-computation overlap are the root causes of the long latency and low throughput. To this end, we propose Ebird, a deep learning serving system that comprises a GPU-resident memory pool, a multi-granularity inference engine, and an elastic batch scheduler. The memory pool eliminates unnecessary waiting in the batching operation and enables data transfer-computation overlap. The inference engine enables concurrent execution of different batches, improving GPU resource utilization. The batch scheduler organizes inference requests elastically. Our experimental results on an Nvidia Titan RTX GPU show that, compared with TensorFlow Serving, Ebird reduces the response latency of inference requests by up to 70.9% and improves throughput by up to 49.3% while guaranteeing the QoS target.