Size Zheng, Siyuan Chen, Peidi Song, Renze Chen, Xiuhong Li, Shengen Yan, Dahua Lin, Jingwen Leng, Yun Liang

In Proceedings of International Symposium on High Performance Computer Architecture (HPCA), 2023

ABSTRACT

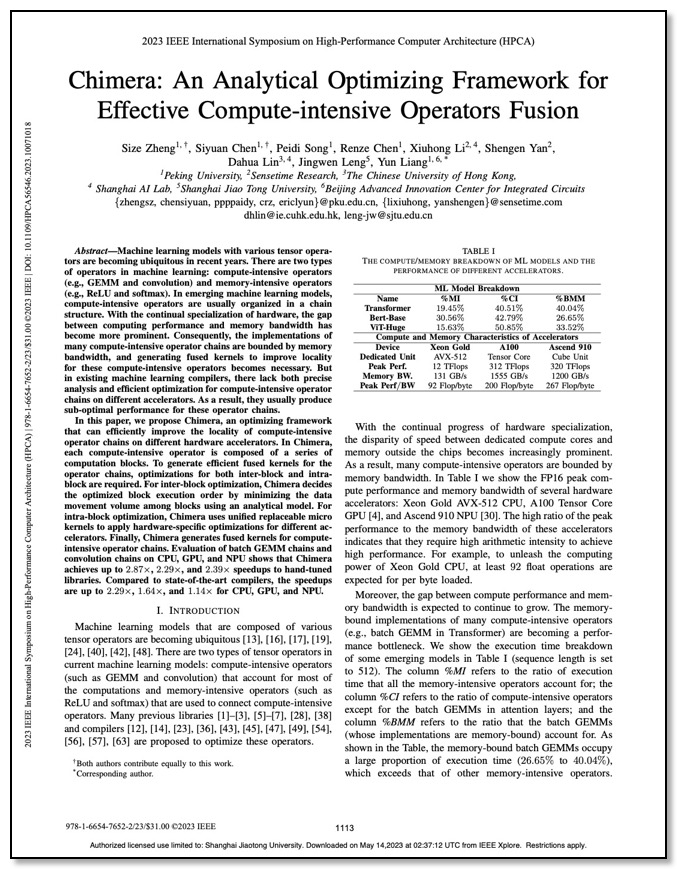

Machine learning models built from a variety of tensor operators have become ubiquitous in recent years. There are two types of operators in machine learning: compute-intensive operators (e.g., GEMM and convolution) and memory-intensive operators (e.g., ReLU and softmax). In emerging machine learning models, compute-intensive operators are usually organized in a chain structure. With the continual specialization of hardware, the gap between computing performance and memory bandwidth has become more prominent. Consequently, the implementations of many compute-intensive operator chains are bounded by memory bandwidth, and generating fused kernels to improve locality for these compute-intensive operators becomes necessary. However, existing machine learning compilers lack both precise analysis and efficient optimization for compute-intensive operator chains on different accelerators. As a result, they usually produce sub-optimal performance for these operator chains. In this paper, we propose Chimera, an optimizing framework that can efficiently improve the locality of compute-intensive operator chains on different hardware accelerators. In Chimera, each compute-intensive operator is composed of a series of computation blocks. To generate efficient fused kernels for the operator chains, both inter-block and intra-block optimizations are required. For inter-block optimization, Chimera decides the optimized block execution order by minimizing the data movement volume among blocks using an analytical model. For intra-block optimization, Chimera uses unified replaceable micro kernels to apply hardware-specific optimizations for different accelerators. Finally, Chimera generates fused kernels for compute-intensive operator chains. Evaluation of batch GEMM chains and convolution chains on CPU, GPU, and NPU shows that Chimera achieves speedups of up to 2.87×, 2.29×, and 2.39×, respectively, over hand-tuned libraries. Compared to state-of-the-art compilers, the speedups are up to 2.29×, 1.64×, and 1.14× on CPU, GPU, and NPU, respectively.
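The inter-block idea in the abstract (choosing a block execution order that minimizes data movement) can be illustrated with a small Python sketch. This is not Chimera's actual analytical model; it is a simplified stand-in under stated assumptions. The chain shape (`C = A @ B`, then `E = C @ D`), the sizes in `DIMS` and `TILES`, and all function names are hypothetical. The sketch also assumes one on-chip tile buffer per tensor, that the intermediate `C` never leaves the chip, and that every loop permutation is a legal candidate (legality and on-chip capacity checks are omitted).

```python
from itertools import permutations
from math import ceil, prod

# Hypothetical GEMM chain: C[M,L] = A[M,K] @ B[K,L], then E[M,N] = C[M,L] @ D[L,N].
DIMS = {"m": 512, "n": 64, "k": 64, "l": 256}   # assumed problem sizes
TILES = {"m": 64, "n": 64, "k": 64, "l": 64}    # assumed block (tile) sizes

# Tensors that move to or from off-chip memory, with the block loops indexing them.
# The intermediate C[m, l] is assumed to stay on chip, so it is not listed.
TENSORS = {"A": ("m", "k"), "B": ("k", "l"), "D": ("l", "n"), "E": ("m", "n")}


def trip_count(dim):
    """Number of blocks along one dimension."""
    return ceil(DIMS[dim] / TILES[dim])


def tile_loads(order, axes):
    """Tile transfers for one tensor under a block loop order (outermost first)."""
    # Loops with a single iteration never change which tile is needed.
    relevant = [order.index(a) for a in axes if trip_count(a) > 1]
    if not relevant:
        return 1
    # Every iteration of the loops down to the innermost relevant loop moves a
    # tile; loops nested deeper than that reuse the tile already on chip.
    return prod(trip_count(d) for d in order[: max(relevant) + 1])


def data_movement(order):
    """Total elements moved across all off-chip tensors for one loop order."""
    return sum(
        tile_loads(order, axes) * prod(TILES[a] for a in axes)
        for axes in TENSORS.values()
    )


def best_order():
    """Exhaustively pick the block loop order with the least data movement."""
    return min(permutations(DIMS), key=data_movement)
```

Calling `best_order()` returns a tuple of loop names, outermost first. With the assumed sizes above, this toy model favors orders that place the `l` loop outside the `m` loop, because the `B` and `D` tiles are then fetched once per `l` block instead of once per `(m, l)` pair. Exhaustive enumeration is used only because four loops give 24 candidates; it is a convenience of the sketch, not a claim about how Chimera searches.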